
MEDUSA

MEDUSA© is a Python-based open-source software ecosystem to facilitate the creation of brain-computer interface (BCI) systems and neuroscience experiments [1]. The software boasts a range of features, including complete compatibility with lab streaming layer, a collection of ready-made examples for common BCI paradigms, extensive tutorials and documentation, an accessible online app marketplace, and a robust modular design, among others. This software has been developed by members of the Biomedical Engineering Group at the University of Valladolid, Spain.

Software architecture design

MEDUSA© comprises a modular design composed of three main independent entities:

- MEDUSA© Platform: the platform is a Python-based user interface for visualizing biosignals and conducting real-time experiments. Primarily built on PyQt, it offers straightforward installation via binaries or execution through the source code. Real-time visualization is fully customizable, encompassing temporal graphs, power spectral density graphs, as well as power-based and connectivity-based topoplots. Through a user management system, the platform allows users to install and develop apps directly linked to their accounts.

- MEDUSA© Kernel: the kernel is an independent PyPI package that encapsulates all classes and functions required to record and process the biosignals of the experiments. While employed by the MEDUSA© Platform for real-time processing, the kernel can also be installed as a Python package in any local Python project. Its processing methods encompass linear and non-linear temporal methods, spectral metrics, statistical analysis, as well as specialized algorithms for electroencephalogram (EEG) and magnetoencephalogram (MEG) data, alongside state-of-the-art algorithms to process many BCI control signals. A comprehensive list of the processing algorithms can be found in the documentation.

- App marketplace: the MEDUSA© website provides users with the capability to create and manage a profile within the ecosystem. Within the app marketplace, users can explore and download open-source apps or contribute their own creations. User-developed apps may be designated as public or private, with accessibility options for selected individuals. Presently, MEDUSA© offers a comprehensive range of BCI paradigms, encompassing code-modulated visual evoked potentials (c-VEP), P300-based spellers, motor imagery (MI), and neurofeedback (NF). Additionally, it includes a variety of cognitive psychology tests such as the Stroop task, Go/No-Go test, Dual N-back test, Corsi Block-Tapping test, and more.

Main features

The main features of MEDUSA© can be summarized as follows:

- Open-source: MEDUSA© is an open-source project, allowing users to freely access and modify the code to suit their research needs. Furthermore, the kernel can be used in any custom Python project to load biosignals recorded by MEDUSA© and/or to use the processing algorithms included in the ecosystem.

- Fully Python-based: the platform is built entirely in Python, a high-level programming language. Applications such as BCI paradigms and neuroscience experiments can be developed within Python using PyQt, or in any other programming language by utilizing a built-in TCP/IP asynchronous protocol to communicate with the platform. Notably, many publicly available apps are developed in Unity. This choice was driven by specific requirements, such as precise synchronization between EEG and stimuli for paradigms like c-VEP, or to enhance user experience through visually appealing designs, as seen in MI-based and NF-based apps.

- LSL compatible: MEDUSA© can record and process signals streamed through the lab streaming layer (LSL) protocol, making it compatible with a wide range of biomedical devices. This feature empowers users to engage in multimodal studies, enabling them to utilize an unlimited number of biosignals concurrently within the same experiment.

- Modular design: the software is composed of (1) the platform (user interface and signal management) and (2) the kernel (a PyPI package with processing functions and classes), providing a modular and scalable framework.

- Control signals: the ecosystem also includes pre-built examples for various BCI paradigms, such as P300, c-VEP, SMR, and NF applications, streamlining the development process for researchers.
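
The "Fully Python-based" item above mentions that apps written in other languages talk to the platform over an asynchronous TCP/IP protocol. The sketch below only illustrates that general idea: a platform-side `asyncio` server and an app-side client exchanging newline-delimited JSON messages. The message fields (`event_type`, `payload`), the function names, and the framing are hypothetical and should not be read as MEDUSA©'s actual protocol.

```python
import asyncio
import json


async def handle_app(reader, writer):
    """Platform side (hypothetical): acknowledge each newline-delimited JSON message."""
    while True:
        line = await reader.readline()
        if not line:  # the app closed the connection
            break
        message = json.loads(line)
        reply = {"event_type": "ack", "received": message["event_type"]}
        writer.write((json.dumps(reply) + "\n").encode())
        await writer.drain()
    writer.close()


async def send_event(host, port, event_type, payload):
    """App side (hypothetical): send one message and wait for the acknowledgement."""
    reader, writer = await asyncio.open_connection(host, port)
    message = {"event_type": event_type, "payload": payload}
    writer.write((json.dumps(message) + "\n").encode())
    await writer.drain()
    reply = json.loads(await reader.readline())
    writer.close()
    await writer.wait_closed()
    return reply


async def demo():
    # Port 0 lets the operating system pick a free port for the demo.
    server = await asyncio.start_server(handle_app, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        return await send_event("127.0.0.1", port, "stimulus_onset", {"trial": 1})


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because the exchange is plain text over a socket, the app side could equally be written in C# for Unity or in any other language with TCP support, which is the point of decoupling apps from the platform in this way.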

Supported EEG-based BCI paradigms

MEDUSA© already supports most state-of-the-art noninvasive BCI paradigms utilized in scientific literature, covering both exogenous and endogenous control signals extracted from electroencephalography (EEG):

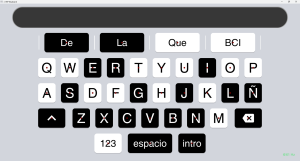

- Code-modulated visual evoked potentials (c-VEP): MEDUSA© stands as the only general-purpose system for developing BCIs that supports c-VEP paradigms. This exogenous control signal originates from the primary visual cortex (occipital cortex) when users focus on commands that flash following a pseudorandom sequence [2]. Different selection commands are encoded with distinct sequences that show minimal correlation between them. Typically, this is achieved by employing a time series with a flat autocorrelation profile (e.g., m-sequences) and encoding commands using temporally shifted versions of the original sequence. This approach, known as the circular shifting paradigm, requires a calibration to extract the brain's response to the original code (main template). Templates for the rest of the commands are computed by temporally shifting the main template according to each command's lag. This enables online decoding by computing the correlation between the online response and the commands' templates [2]. It has been demonstrated that this approach can reach high performance (over 90% accuracy, 2-5 s per selection) with short calibration times (1-2 min) [2]. MEDUSA© incorporates several built-in apps utilizing this paradigm: the "c-VEP Speller," a speller that utilizes binary m-sequences (black & white flashes) for command encoding; and the "P-ary c-VEP Speller," which employs p-ary m-sequences encoded with different shades of grey (or custom colors) to alleviate visual fatigue [3].

- P300 evoked potentials: P300s are positive event-related potentials (ERP) that occur over centro-parietal locations approximately 300 ms after the presentation of an unexpected stimulus that requires attention or cognitive processing. P300s are commonly elicited using oddball paradigms, where sequences of repetitive stimuli are infrequently interrupted by a deviant stimulus [4]. This can be extended to provide real-time communication using noninvasive BCIs by employing the row-column paradigm (RCP). In this paradigm, a command matrix is presented to the users, with rows and columns randomly flashing. The user must attend to the desired command, generating a P300 component only when the row and column containing that command flash. By detecting the most likely row and column based on the decoding of these P300 components, the system can determine the target command in real time [4]. MEDUSA© already implements several built-in apps for P300-based BCIs, such as the "RCP speller".

- Sensorimotor rhythms (SMR): SMRs are rhythmic oscillations of the brain activity over sensorimotor cortices. This rhythmic activity is mainly composed of the μ (8-12 Hz), β (18-30 Hz) and γ (> 30 Hz) frequency bands [4]. SMRs are characterized by a desynchronization of rhythms during motor behaviours, such as voluntary executed or imagined movements. This decrease is known as event-related desynchronization (ERD) and appears over the contralateral region, providing a bilateral symmetry [4]. This characteristic pattern can be used to develop BCI applications in which the user can control two-degrees-of-freedom systems by performing motor imagery (typically of the upper limbs) [4]. SMR modulation training has also been proposed to restore motor function in post-stroke patients [5]. MEDUSA© provides a built-in app for SMR-based BCIs, which includes a classical machine learning algorithm for decoding user intention, common spatial patterns (CSP) [4], as well as the state-of-the-art deep learning model EEGSym [6].

- Neurofeedback (NF): NF is a technique that aims to self-regulate certain patterns of one's own brain activity [7]. This can be achieved by providing real-time feedback related to these patterns. Through the provided feedback and operant conditioning, the user can find cognitive strategies to reach a mental state related to the desired brain activity pattern. This learning process is believed to induce brain plasticity, reinforcing the neural networks involved in the trained brain activity pattern and leading to an improvement in the cognitive functions associated with these neural networks [7]. NF has been proposed for several clinical applications, such as the treatment of major depressive disorder and attention deficit hyperactivity disorder, as well as for the improvement of cognitive functions in healthy individuals [8]. MEDUSA© implements ITACA Neurofeedback training, an app designed for conducting NF training studies [1]. It includes three different training scenarios with a gamified design and training metrics based on both power and connectivity to provide feedback on different brain patterns [9].
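
The circular shifting paradigm described in the c-VEP item above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not MEDUSA©'s implementation: the "main template" is simply the 63-bit m-sequence mapped to -1/+1 (standing in for a calibrated brain response), the 15 command lags are hypothetical, and the trial is simulated by adding Gaussian noise to the attended command's template.

```python
import math
import random


def m_sequence(n_bits=6, taps=(6, 1)):
    """Binary m-sequence from the recurrence a[k] = XOR of a[k - t] for t in taps.

    The default taps correspond to the primitive polynomial x^6 + x^5 + 1,
    which yields a maximal-length sequence of 2**6 - 1 = 63 bits.
    """
    seq = [1] * n_bits  # any non-zero seed works
    while len(seq) < 2 ** n_bits - 1:
        bit = 0
        for t in taps:
            bit ^= seq[-t]
        seq.append(bit)
    return seq


def circular_shift(signal, lag):
    """Delay a list by `lag` samples, wrapping around the end."""
    lag %= len(signal)
    return signal[-lag:] + signal[:-lag]


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def decode(response, main_template, lags):
    """Return the index of the command whose shifted template correlates best."""
    scores = [pearson(response, circular_shift(main_template, lag)) for lag in lags]
    return max(range(len(lags)), key=scores.__getitem__)


# Stand-in for the calibrated brain response: the code itself mapped to -1/+1.
main_template = [2.0 * bit - 1.0 for bit in m_sequence()]
lags = list(range(0, 60, 4))  # 15 hypothetical commands, 4 samples apart

# Simulate one noisy trial in which the user attends command 7.
rng = random.Random(0)
attended = 7
trial = [s + rng.gauss(0.0, 0.5) for s in circular_shift(main_template, lags[attended])]
decoded = decode(trial, main_template, lags)
print(decoded)
```

The flat autocorrelation of the m-sequence is what makes this work: every shifted template correlates almost not at all with the others, so a single calibrated template is enough to separate all commands.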

Supported cognitive psychology tests

Cognitive psychology tests make it possible to evaluate different cognitive functions and to obtain a quantitative measure of their state. MEDUSA© implements several computerized versions of classic tests that record biosignals and user responses at the same time, which enables cognitive research studies that relate behavior to brain activity.

- Corsi Block-Tapping Test: This test assesses visuo-spatial short-term working memory. It involves mimicking a sequence of up to nine blocks. The sequence starts out simple and becomes more complex until the user’s performance suffers [9]. The version provided by MEDUSA© includes both a "forward" mode (repeating the sequence in the same order in which it was presented) and a "backward" mode (repeating the sequence in reverse order).

- Dual N-Back: The N-back task is a continuous performance task that is commonly used to measure the central executive function of working memory. The user is presented with a sequence of stimuli and the task is to indicate when the current stimulus matches the stimulus from N steps earlier in the sequence [9]. The dual-task variant presents two independent sequences simultaneously, using both auditory and visual modalities. The MEDUSA© implementation of this test can be run in the visual, auditory or dual modality. It also allows adjusting the load factor N and performing the test in either Spanish or English.

- Digit Span: Memory span is the longest list of items that a person can repeat back in correct order immediately after presentation. It is a common measure of working memory and short-term memory. In this test, users are presented with a sequence of numerical digits and are tasked to recall the sequence correctly, with increasingly longer sequences being tested in each trial [9]. MEDUSA© implements a computerized version that allows the task to be performed in both forward and backward modes.

- Emotional continuous performance test (ECPT): The ECPT is a standardized evaluation tool designed to assess and measure an individual's attentional abilities and, to a lesser extent, their response inhibition or disinhibition as part of executive control. The ECPT task involves three different stimuli: angry faces, happy faces and neutral faces with an artificial sound. Each trial consists of two stimuli according to the following pairs: angry-angry, angry-happy, happy-happy, and happy-neutral. The app implemented in MEDUSA© provides a convenient and efficient means of administering the test in various settings, such as research studies, clinical assessments, and educational environments.

- Go/No-Go test: A go/no-go test is a two-step verification process that uses two boundary conditions, or a binary classification. The test is passed only when the go condition has been met and the no-go condition has been failed. In psychology, go/no-go tests are used to measure a user’s capacity for sustained attention and response control [9]. The app available in MEDUSA© allows extensive configuration of the parameters of this test.

- Oddball Paradigm: The oddball paradigm is a technique used in evoked potential research in which visual or auditory stimuli are used to assess the neural reactions to unpredictable but recognizable events [10]. The subject is asked to react, either by counting or by pressing a button, to target stimuli that occur rarely amongst a series of more common stimuli, which often require no response. It has been found that an evoked potential over the parieto-central area of the scalp, which usually appears around 300 ms after the stimulus and is called P300, is larger after the target stimulus. Two apps are available in MEDUSA©: a visual & auditory oddball paradigm, and a visual oddball task with real-time monitoring of the ERP for demonstration purposes.

- Stroop task: Based on the Stroop effect, which is the delay in reaction time between congruent and incongruent stimuli, this test measures selective attention [9]. It presents different color names that may or may not match the color in which they are printed. Users are asked to respond to the color of the word by pressing the corresponding key. The version implemented by MEDUSA© allows the test to be performed in either Spanish or English.
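
The matching rule behind the Dual N-Back item above is simple enough to sketch directly. The example below is a minimal illustration, not code from the MEDUSA© app: the function names, the stimulus streams, and the simulated responses are all hypothetical. It flags the positions where a stimulus equals the one shown N steps earlier, and scores a user's responses against those targets for a dual 2-back run.

```python
def nback_targets(stimuli, n):
    """Flag each position whose stimulus equals the one presented n steps earlier."""
    return [i >= n and stimuli[i] == stimuli[i - n] for i in range(len(stimuli))]


def score_responses(targets, responses):
    """Count hits, misses, false alarms and correct rejections."""
    counts = {"hits": 0, "misses": 0, "false_alarms": 0, "correct_rejections": 0}
    for is_target, responded in zip(targets, responses):
        if is_target and responded:
            counts["hits"] += 1
        elif is_target:
            counts["misses"] += 1
        elif responded:
            counts["false_alarms"] += 1
        else:
            counts["correct_rejections"] += 1
    return counts


# Dual 2-back: one visual stream (grid positions) and one auditory stream (letters).
visual = [3, 7, 3, 1, 3, 1, 5]
auditory = ["C", "H", "K", "H", "K", "Q", "K"]
visual_targets = nback_targets(visual, 2)
auditory_targets = nback_targets(auditory, 2)

# Hypothetical key presses for the visual stream (True = "match" pressed).
visual_responses = [False, False, True, False, False, True, True]
visual_score = score_responses(visual_targets, visual_responses)
print(visual_score)
```

In the dual modality the two streams are scored independently, so raising the load factor N only changes the `n` argument passed to `nback_targets`.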

Links

Website LinkedIn GitHub Twitter YouTube

- [1] Santamaría-Vázquez, E., Martínez-Cagigal, V., Marcos-Martínez, D., Rodríguez-González, V., Pérez-Velasco, S., Moreno-Calderón, S., & Hornero, R. (2023). MEDUSA©: A novel Python-based software ecosystem to accelerate brain-computer interface and cognitive neuroscience research. Computer Methods and Programs in Biomedicine, 230, 107357. DOI: https://doi.org/10.1016/j.cmpb.2023.107357

- [2] Martínez-Cagigal, V., Thielen, J., Santamaría-Vázquez, E., Pérez-Velasco, S., Desain, P., & Hornero, R. (2021). Brain–computer interfaces based on code-modulated visual evoked potentials (c-VEP): a literature review. Journal of Neural Engineering, 18(6), 061002. DOI: https://doi.org/10.1088/1741-2552/ac38cf

- [3] Martínez-Cagigal, V., Santamaría-Vázquez, E., Pérez-Velasco, S., Marcos-Martínez, D., Moreno-Calderón, S., & Hornero, R. (2023). Non-binary m-sequences for more comfortable brain–computer interfaces based on c-VEPs. Expert Systems with Applications, 232, 120815. DOI: https://doi.org/10.1016/j.eswa.2023.120815

- [4] Wolpaw, J., & Wolpaw, E. W. (Eds.) (2012). Brain–Computer Interfaces: Principles and Practice. Oxford University Press (online edn, Oxford Academic, 24 May 2012). DOI: https://doi.org/10.1093/acprof:oso/9780195388855.001.0001

- [5] Sebastián-Romagosa, M., Cho, W., Ortner, R., Murovec, N., Von Oertzen, T., Kamada, K., ... & Guger, C. (2020). Brain computer interface treatment for motor rehabilitation of upper extremity of stroke patients—a feasibility study. Frontiers in Neuroscience, 14, 591435.

- [6] Pérez-Velasco, S., Santamaría-Vázquez, E., Martínez-Cagigal, V., Marcos-Martínez, D., & Hornero, R. (2022). EEGSym: Overcoming inter-subject variability in motor imagery based BCIs with deep learning. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 30, 1766-1775.

- [7] Sitaram, R., Ros, T., Stoeckel, L., Haller, S., Scharnowski, F., Lewis-Peacock, J., ... & Sulzer, J. (2017). Closed-loop brain training: the science of neurofeedback. Nature Reviews Neuroscience, 18(2), 86-100.

- [8] Ros, T., J. Baars, B., Lanius, R. A., & Vuilleumier, P. (2014). Tuning pathological brain oscillations with neurofeedback: a systems neuroscience framework. Frontiers in Human Neuroscience, 8, 1008.

- [9] Marcos-Martínez, D., Santamaría-Vázquez, E., Martínez-Cagigal, V., Pérez-Velasco, S., Rodríguez-González, V., Martín-Fernández, A., ... & Hornero, R. (2023). ITACA: An open-source framework for Neurofeedback based on Brain–Computer Interfaces. Computers in Biology and Medicine, 160, 107011.

- [10] García-Larrea, L., Lukaszewicz, A. C., & Mauguière, F. (1992). Revisiting the oddball paradigm. Non-target vs neutral stimuli and the evaluation of ERP attentional effects. Neuropsychologia, 30(8), 723-741.